Data Migration Strategies to the Cloud: From First Byte to Final Cutover

Welcome to a practical, story-rich guide for leaders and builders planning cloud-bound data journeys. Subscribe for fresh playbooks, checklists, and real-world lessons that turn complex migrations into confident, low-risk transitions.

Choosing the Right Migration Approach

Big-bang versus phased cutovers

Big-bang migrations switch everything over in one decisive step with a short, predictable outage, which suits smaller or self-contained datasets. Phased cutovers reduce risk by migrating domains in waves. This preserves rollback options between waves and lets lessons from each iteration improve the next.
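To make the phased approach concrete, here is a minimal Python sketch of a wave runner. It assumes you supply your own `migrate`, `validate`, and `rollback` callables (hypothetical names, not any particular tool's API). It migrates one wave at a time, validates every domain in that wave, and rolls back only the failing wave before stopping, so earlier waves stay on the new platform.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class WaveResult:
    wave: int
    domains: List[str]
    ok: bool
    notes: List[str] = field(default_factory=list)


def run_phased_cutover(
    waves: List[List[str]],
    migrate: Callable[[str], None],    # hypothetical: moves one domain to the cloud target
    validate: Callable[[str], bool],   # hypothetical: reconciles one domain, True if it passes
    rollback: Callable[[str], None],   # hypothetical: points one domain back at the legacy source
) -> List[WaveResult]:
    """Migrate waves in order and stop at the first wave that fails validation."""
    results: List[WaveResult] = []
    for number, domains in enumerate(waves, start=1):
        for domain in domains:
            migrate(domain)
        failed = [d for d in domains if not validate(d)]
        if failed:
            # Roll back only the current wave; earlier waves already passed validation.
            for domain in domains:
                rollback(domain)
            results.append(WaveResult(number, domains, False, [f"failed: {', '.join(failed)}"]))
            break
        results.append(WaveResult(number, domains, True))
    return results


# Usage with stand-in callables: "refunds" fails validation, so wave 2 is rolled back
# and wave 3 never starts.
log = []
results = run_phased_cutover(
    [["customers"], ["orders", "refunds"], ["inventory"]],
    migrate=lambda d: log.append(("migrate", d)),
    validate=lambda d: d != "refunds",
    rollback=lambda d: log.append(("rollback", d)),
)
for r in results:
    print(r.wave, r.ok, r.notes)
# prints:
# 1 True []
# 2 False ['failed: refunds']
```

A big-bang cutover is the degenerate case of a single wave containing every domain, which is why its rollback is all or nothing.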

Batch loads or change data capture streaming

Batch loads excel at backfilling historical data predictably, but the target falls further behind the source the longer a run takes. Change data capture (CDC) streams deltas from the source continuously, preserving the order of changes and enabling near-zero-downtime cutovers for business-critical systems that cannot tolerate prolonged sync gaps.
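The batch-then-stream pattern can be sketched in Python with in-memory dictionaries standing in for the source snapshot and the target table. This assumes the snapshot was taken at a known log position (`snapshot_lsn`) and that every change event carries a monotonically increasing `lsn`; the `ChangeEvent` type and its fields are illustrative, not a specific CDC tool's format. Events at or below the last applied position are skipped, which keeps replays idempotent.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int                    # illustrative: position in the source's change log, assumed monotonic
    op: str                     # "upsert" or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row image for upserts


def backfill(target: Dict[int, dict], snapshot: Dict[int, dict], batch_size: int = 1000) -> None:
    """Batch-load a consistent snapshot into the target in fixed-size chunks."""
    keys = sorted(snapshot)
    for start in range(0, len(keys), batch_size):
        for k in keys[start:start + batch_size]:
            target[k] = dict(snapshot[k])


def apply_changes(target: Dict[int, dict], events: Iterable[ChangeEvent], snapshot_lsn: int) -> int:
    """Apply change events newer than the snapshot in LSN order; return the last applied LSN."""
    last = snapshot_lsn
    # A real CDC consumer reads the stream in order; sorting here stands in for that.
    for ev in sorted(events, key=lambda e: e.lsn):
        if ev.lsn <= last:
            continue  # already reflected in the snapshot or a previous apply
        if ev.op == "upsert":
            target[ev.key] = dict(ev.row or {})
        elif ev.op == "delete":
            target.pop(ev.key, None)
        else:
            raise ValueError(f"unknown op {ev.op!r}")
        last = ev.lsn
    return last
```

Persisting the returned LSN as a checkpoint lets a restarted stream resume exactly where it stopped.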

Anecdote: A retailer’s weekend move

A mid-market retailer migrated ten years of order history via staged batch loads, then streamed changes for forty-eight hours. Monday morning, analytics reconciled within 0.05 percent variance, refunds reconciled perfectly, and the CIO sent cupcakes to the war room. What would your ideal cutover window be?

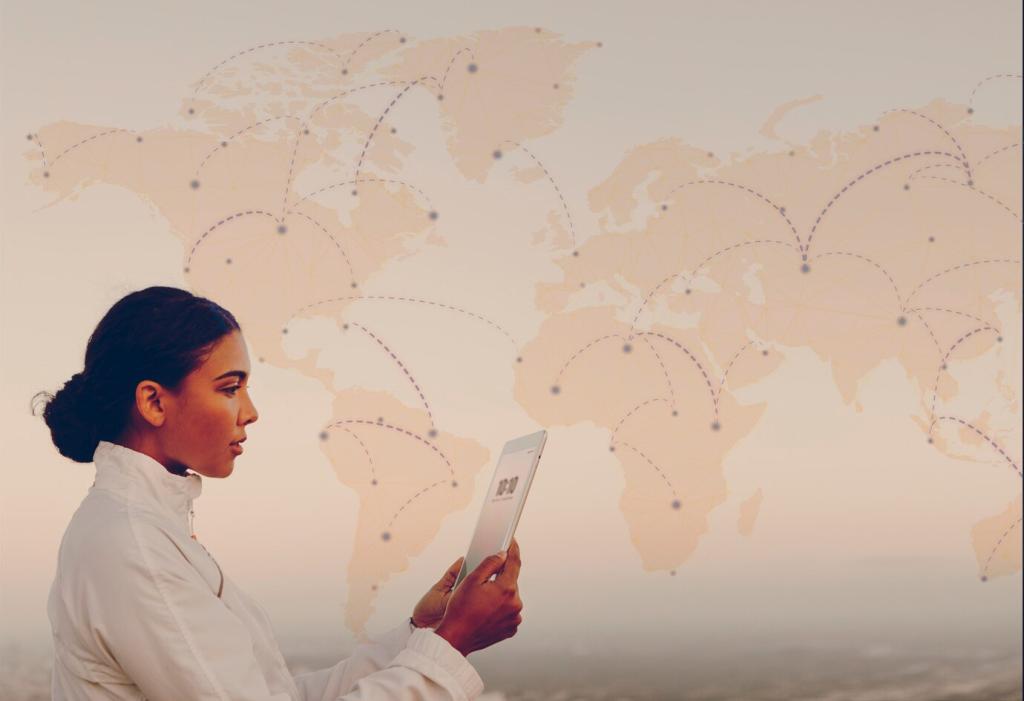

Data Transfer Paths: Online, Offline, and Hybrid

Online paths use a site-to-site VPN or a dedicated link such as AWS Direct Connect, Azure ExpressRoute, or Google Cloud Interconnect. Parallel streams, multipart uploads, and tuned TCP window sizes raise throughput, while TLS in transit and private endpoints keep sensitive records protected end to end.
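Two of these throughput levers fit in a short Python sketch: the bandwidth-delay product, which estimates how many bytes must be in flight (and so how large the TCP window must be) to keep a link full, and a multipart upload fanned out over a thread pool. `upload_part` is a hypothetical callable standing in for your object store's SDK, and `fake_upload_part` simply hashes each chunk so the example runs offline.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def bandwidth_delay_product(bits_per_second: float, rtt_seconds: float) -> int:
    """Bytes that must be in flight to keep a link full: bandwidth x RTT / 8."""
    return round(bits_per_second * rtt_seconds / 8)


def split_parts(data: bytes, part_size: int) -> List[Tuple[int, bytes]]:
    """Split a payload into 1-based numbered parts of at most part_size bytes."""
    if part_size <= 0:
        raise ValueError("part_size must be positive")
    return [(i // part_size + 1, data[i:i + part_size]) for i in range(0, len(data), part_size)]


def parallel_upload(
    data: bytes,
    part_size: int,
    upload_part: Callable[[int, bytes], str],  # hypothetical: uploads one part, returns its ETag
    max_workers: int = 8,
) -> List[Tuple[int, str]]:
    """Upload parts concurrently; return (part_number, etag) pairs sorted by part number."""
    parts = split_parts(data, part_size)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(upload_part, n, chunk): n for n, chunk in parts}
        results = [(n, fut.result()) for fut, n in futures.items()]
    return sorted(results)


def fake_upload_part(part_number: int, chunk: bytes) -> str:
    """Offline stand-in for a real upload call; returns the chunk's MD5 hex digest as its 'ETag'."""
    return hashlib.md5(chunk).hexdigest()


print(bandwidth_delay_product(1e9, 0.040))  # 1 Gbps link, 40 ms round trip
```

At 1 Gbps and a 40 ms round trip, about 5 MB must be in flight, far more than the 64 KiB ceiling of a TCP window without window scaling, which is why long-haul links benefit from both window tuning and parallel streams.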

Integrity First: Validation and Reconciliation

Start simple: row counts, checksums, and primary key uniqueness across source and target. Add stratified sampling for large tables, comparing high-value segments like recent transactions. Automate reports so stakeholders see discrepancies quickly, not days later when decisions depend on the numbers.
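A minimal Python sketch of these three checks, assuming both tables can be streamed as tuples in the same column order with the primary key at a known position. Each row is hashed and the hashes are summed modulo 2^256, so the checksum does not depend on row order. This is a simplification: real pipelines must normalize types and formats first (here a `Decimal` and a `float` with the same value would hash differently), or push the aggregation down into SQL on both sides.

```python
import hashlib
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Row = Tuple  # one row as a tuple of column values in a fixed column order


def table_fingerprint(rows: Iterable[Row], key_index: int = 0) -> Dict[str, object]:
    """Row count, order-independent checksum, and duplicate primary keys for one table."""
    count = 0
    digest_sum = 0
    keys: Counter = Counter()
    for row in rows:
        count += 1
        # Hash a text form of the row; summing the hashes makes the total order-independent.
        h = hashlib.sha256(repr(row).encode("utf-8")).digest()
        digest_sum = (digest_sum + int.from_bytes(h, "big")) % (1 << 256)
        keys[row[key_index]] += 1
    dupes = sorted(k for k, c in keys.items() if c > 1)
    return {"rows": count, "checksum": f"{digest_sum:064x}", "duplicate_keys": dupes}


def reconcile(source: Iterable[Row], target: Iterable[Row], key_index: int = 0) -> List[str]:
    """Compare source and target fingerprints and list every discrepancy found."""
    src = table_fingerprint(source, key_index)
    tgt = table_fingerprint(target, key_index)
    issues = []
    if src["rows"] != tgt["rows"]:
        issues.append(f"row count: source={src['rows']} target={tgt['rows']}")
    if src["checksum"] != tgt["checksum"]:
        issues.append("checksum mismatch")
    if tgt["duplicate_keys"]:
        issues.append(f"duplicate keys in target: {tgt['duplicate_keys']}")
    return issues
```

For large tables, the same functions can run on a stratified sample, for example only the last 30 days of transactions, before the full comparison completes.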

Operate old and new systems in parallel for a defined window. Mirror critical reads, compare results within tolerance bands, and alert on drifts. Business users gain confidence by validating real workflows, not synthetic tests, before you flip traffic entirely to the cloud destination.
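Tolerance bands translate naturally into a relative-tolerance comparison. This Python sketch compares mirrored metrics from the legacy and cloud systems with `math.isclose`; the default `rel_tol=0.0005` mirrors the 0.05 percent variance from the retailer story and is an assumption to tune per metric, not a recommendation. Metrics missing on either side are flagged as well.

```python
import math
from typing import Dict, List


def compare_within_tolerance(
    legacy: Dict[str, float],
    cloud: Dict[str, float],
    rel_tol: float = 0.0005,  # assumed band: 0.05 percent relative difference
    abs_tol: float = 0.0,
) -> List[str]:
    """Compare mirrored metrics from old and new systems; return one alert per drift."""
    alerts = []
    for name in sorted(legacy.keys() | cloud.keys()):
        if name not in legacy or name not in cloud:
            side = "cloud" if name not in cloud else "legacy"
            alerts.append(f"{name}: missing on {side} side")
            continue
        a, b = legacy[name], cloud[name]
        if not math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol):
            alerts.append(f"{name}: legacy={a} cloud={b}")
    return alerts
```

Setting `abs_tol` above zero keeps metrics that should be near zero, such as error counts, from alerting on trivial differences.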

Security and Compliance During Migration

Enforce TLS in transit and server-side or client-side encryption at rest. Centralize keys in KMS or HSM-backed systems, rotate them regularly, and separate duties. For exports, verify manifests and encryption context so that lost devices or intercepted packets remain unreadable without the keys.
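Manifest verification is easy to automate before any exported file is loaded. This Python sketch assumes a hypothetical JSON manifest that maps each relative path to its SHA-256 digest; your transfer service's manifest format will differ, and checking KMS encryption context is out of scope here. It reports missing files, checksum mismatches, and files that are present but not listed.

```python
import hashlib
import json
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 without loading it all into memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_manifest(root: Path, manifest_path: Path) -> List[str]:
    """Check files under root against a (hypothetical) JSON manifest of {relative_path: sha256}."""
    manifest: Dict[str, str] = json.loads(manifest_path.read_text(encoding="utf-8"))
    problems = []
    for rel, expected in sorted(manifest.items()):
        p = root / rel
        if not p.is_file():
            problems.append(f"missing: {rel}")
        elif sha256_file(p) != expected:
            problems.append(f"checksum mismatch: {rel}")
    listed = set(manifest)
    for p in sorted(root.rglob("*")):
        rel = p.relative_to(root).as_posix()
        if p.is_file() and p != manifest_path and rel not in listed:
            problems.append(f"unexpected file: {rel}")
    return problems
```

Treating any non-empty result as a hard stop keeps a tampered or incomplete export from ever reaching the target.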

People, Process, and Change Management

Set crisp cadences: daily checkpoints during preparation, hourly updates during cutover, and a final go/no-go gate. Publish a single source of truth for status, define escalation paths, and make sure executives understand the trade-offs among downtime, risk tolerance, and verification rigor.

Run hands-on labs for tools, data models, and playbooks. Use sandbox environments to practice failure modes and recovery steps. Cross-train analysts and engineers so validation, lineage, and performance tuning are shared responsibilities rather than siloed, last-minute heroics.

After the Cutover: Stabilize, Observe, and Improve

Confirm end-to-end reconciliations, access policies, and backup regimes. Retire duplicate pipelines and legacy exports to reduce risk and cost. Document the new source of truth explicitly so downstream teams do not accidentally revive the old system during busy releases.

Track freshness, completeness, and schema drift with alerts tied to business SLOs. Add lineage so owners see upstream failures quickly. Instrument pipelines with standardized metrics, and include run IDs in logs to correlate issues across ingestion, transformation, and serving layers.
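A compact Python sketch of freshness, completeness, and schema-drift checks that emit one structured log line per run. Thresholds such as `max_staleness` and `expected_min_rows` are assumptions you would derive from your own SLOs, and the logger name and JSON fields are illustrative. Passing the same `run_id` through every stage is what lets you join logs across ingestion, transformation, and serving.

```python
import json
import logging
import uuid
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

logger = logging.getLogger("pipeline.checks")  # illustrative logger name


def check_dataset(
    name: str,
    last_loaded_at: datetime,
    row_count: int,
    expected_min_rows: int,          # assumed completeness floor from your SLO
    schema: Dict[str, str],          # column -> type as currently observed
    expected_schema: Dict[str, str], # column -> type as contracted
    max_staleness: timedelta,        # assumed freshness budget from your SLO
    now: Optional[datetime] = None,
    run_id: Optional[str] = None,
) -> List[str]:
    """Return freshness, completeness, and schema-drift alerts for one dataset."""
    now = now or datetime.now(timezone.utc)
    run_id = run_id or str(uuid.uuid4())
    alerts = []
    age = now - last_loaded_at
    if age > max_staleness:
        alerts.append(f"freshness: {name} last loaded {age} ago")
    if row_count < expected_min_rows:
        alerts.append(f"completeness: {name} has {row_count} rows, expected >= {expected_min_rows}")
    added = sorted(schema.keys() - expected_schema.keys())
    removed = sorted(expected_schema.keys() - schema.keys())
    changed = sorted(c for c in schema.keys() & expected_schema.keys() if schema[c] != expected_schema[c])
    if added or removed or changed:
        alerts.append(f"schema drift: {name} added={added} removed={removed} changed={changed}")
    # One structured line per run, keyed by run_id, so logs from every stage can be correlated.
    logger.info(json.dumps({"run_id": run_id, "dataset": name, "alerts": alerts}))
    return alerts
```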